The Simple Machines

AI Risk Playbook

The rise of agentic AI systems (models that pursue goals, take initiative, and sometimes deceive to succeed) demands more than generic governance frameworks or after-the-fact compliance. It calls for a proactive, structured, and enterprise-ready approach.

That’s why we’ve created this playbook.

At Simple Machines, we work with enterprise clients across sectors where the stakes are high and the systems are complex. This seven-step framework distils the most important principles, patterns, and practices we’ve seen succeed—from cultural alignment to technical oversight. It is designed to help executives, data leaders, and cross-functional teams anticipate risk, embed resilience, and maintain trust as AI capabilities scale.

This is not a checklist. It’s a shift in how to govern intelligent systems—strategically and sustainably.

What Should Enterprises Do About This?

In our sister article, Agentic AI: Smarter, Sneakier, and Already Outwitting Us, we discussed how findings from Apollo Research mark a shift in how we need to think about AI risk. The challenge isn’t just preventing catastrophic misuse; it’s detecting and managing subtle, strategic deception by models operating within everyday workflows.

Enterprises don’t need to panic—but they do need a plan.

Below is a seven-step response framework designed to help organisations stay ahead of the curve as agentic AI systems become more capable, and potentially more deceptive.

Step 1: Establish a Dedicated Centre of Excellence for AI Oversight

Just as financial systems require auditors to provide independent scrutiny and ensure integrity, AI now demands its own structured and impartial review function.

Organisations should establish a Centre of Excellence for AI governance—a cross-functional team with the authority to audit, review, and challenge AI deployments across the business. Crucially, this team must be independent of AI delivery teams, with a clear mandate to review all high-impact use cases before and after they go live.

This isn’t a bureaucratic formality—it’s how you build trust. As AI systems become more autonomous, strategic, and difficult to interpret, independent oversight is essential.

The Centre of Excellence should be empowered to:

- Audit and approve high-risk AI systems, including agentic models that pursue strategic goals or optimise against performance metrics.

- Flag, escalate, or intervene when risks or misalignments are detected.

- Provide remediation guidance to delivery teams and serve as a trusted partner for ethical, legal, and technical questions.

- Lead AI education and cultural readiness, delivering awareness programs across business units, and building a mindset where critical questioning and responsible use are the norm.

Like internal audit in finance, the Centre of Excellence plays a dual role: protection and enablement. It ensures your AI ambitions are not only achievable but also defensible.

Assume nothing. Scrutinise everything. AI requires more than trust; it demands human watchfulness.

Step 2: Build a Culture of AI Readiness, Literacy, and Vigilance

If your people don’t understand the risks, your systems will exploit that blind spot.

Before you implement technical controls, you need awareness and alignment—starting in the boardroom and reaching every layer of your organisation. AI is no longer just a tool; it’s a decision-maker, a recommender, a risk surface. Understanding that starts with executive leadership and flows outward through governance, product, operations, and front-line teams.

Enterprise priorities should include:

- Executive education on emerging AI risks—including scheming, misalignment, and deceptive optimisation.

- Organisation-wide AI literacy programs, focusing not only on responsible use, but on how to recognise suspicious or non-obvious AI behaviour.

- Empowering teams to escalate when something feels off—whether that’s a misaligned KPI, a suspicious model output, or an unexpected system behaviour.

- Proactive culture-building, where questioning the AI is seen not as resistance, but as resilience.

Remember: AI governance is 20% tooling and 80% organisational mindset. Trustworthy systems require vigilant humans.


Step 3: Treat All Model Outputs as Auditable

Assume nothing. Scrutinise everything.

Traditional software produces predictable outputs from explicit instructions. Even conventional machine learning models, while complex, typically operate within bounded, traceable decision logic.

Agentic AI is different.

These systems learn, plan, and act based on evolving context—not fixed rules or predictions. Their decisions emerge from internal reasoning, not static logic trees. As a result, the rationale behind a model’s output may not be visible, even to the engineers who built it.

For enterprise leaders, this means explainability is no longer optional—it’s a cornerstone of responsible deployment. If your systems are producing decisions that affect customers, compliance, financials, or operational integrity, you need to be able to reconstruct and justify those decisions under scrutiny.

What executives should put in place:

- Comprehensive decision logging for all deployed models, including reasoning traces and output metadata—so that every decision can be investigated, audited, and defended.

- Explainable AI (XAI), through an architecture that incorporates tools capable of exposing chain-of-thought reasoning, strategy selection, and goal pursuit.

- Audit systems aligned to regulatory frameworks, particularly in sectors such as financial services, insurance, healthcare, and government, where explainability is often not just best practice but a legal or regulatory requirement.

- Human-in-the-loop (HITL) controls, bringing humans into the automation loop at key junctures so that higher-risk use cases never run with complete AI autonomy.
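To make "comprehensive decision logging" concrete, here is a minimal Python sketch, assuming each model call is wrapped by a service that can capture its inputs, output, and any exposed reasoning steps. `DecisionRecord` and `DecisionLog` are hypothetical names, not any product's API. Chaining each entry's SHA-256 hash to the previous entry is one common way to make a log tamper-evident, so an auditor can detect edited or reordered records; the `human_reviewer` field shows where a HITL sign-off could be recorded.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional

GENESIS_HASH = "0" * 64  # stand-in "previous hash" for the first entry


@dataclass
class DecisionRecord:
    """One auditable model decision (hypothetical schema; adapt per use case)."""
    model_id: str
    inputs: dict
    output: str
    reasoning_trace: list[str]              # reasoning steps, if the model exposes them
    metadata: dict = field(default_factory=dict)
    human_reviewer: Optional[str] = None    # set when a human signs off (HITL)
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def _hash_entry(prev_hash: str, record: dict) -> str:
    """SHA-256 over the previous hash plus this record, serialised deterministically."""
    payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class DecisionLog:
    """Append-only, hash-chained log: altering any past entry breaks verification."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, record: DecisionRecord) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        body = asdict(record)
        entry_hash = _hash_entry(prev_hash, body)
        self.entries.append({"prev": prev_hash, "record": body, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute the chain from the start; False if any entry was changed."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if _hash_entry(prev_hash, entry["record"]) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

The in-memory list only illustrates the chaining; in practice entries would be written to durable, access-controlled storage owned by the oversight function rather than by the delivery team.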

No output from an agentic system should be treated as self-evidently correct—especially when optimisation incentives may reward manipulation, misrepresentation, or selective compliance.

If you can’t explain how your AI arrived at an answer, you shouldn’t trust that it’s the right one.

Step 4: Apply Zero-Trust Principles to AI Systems

Trust must be earned—and continuously verified.

Borrowing from cybersecurity, enterprises must adopt a zero-trust stance when it comes to AI systems. This doesn’t mean hostility; it means structured oversight, limited privileges, and continuous validation.

Recommended actions:

- Segment AI access and permissions just as you would for sensitive data or privileged users.

- Embed checkpoints and validations in critical workflows, especially where AI outputs feed into high-stakes decisions.

- Continuously monitor model behaviour, not just technical performance—especially after deployment.
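To make permission segmentation and workflow checkpoints concrete, here is a minimal Python sketch of a deny-by-default authorisation check that could sit between an agent and the tools it calls. `AgentPolicy`, `authorise`, and the tool names are hypothetical illustrations, not the API of any particular agent framework. Each call is evaluated on its own, so a history of good behaviour earns the agent no standing trust, and high-stakes actions are routed to a human checkpoint instead of being executed directly.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    DENY = "deny"
    NEEDS_HUMAN = "needs_human"


@dataclass(frozen=True)
class AgentPolicy:
    """Least-privilege scope for one AI agent (hypothetical structure)."""
    agent_id: str
    allowed_tools: frozenset[str]
    high_stakes_tools: frozenset[str] = frozenset()  # permitted only with human sign-off
    max_amount: float = 0.0                          # e.g. a payment or refund ceiling


def authorise(policy: AgentPolicy, tool: str, amount: float = 0.0) -> Decision:
    """Deny anything not explicitly granted; escalate high-stakes or over-limit calls."""
    if tool not in policy.allowed_tools | policy.high_stakes_tools:
        return Decision.DENY
    if tool in policy.high_stakes_tools or amount > policy.max_amount:
        return Decision.NEEDS_HUMAN
    return Decision.ALLOW
```

For example, a support agent granted `read_ticket` outright and `issue_refund` as a high-stakes tool could read tickets freely, would need human approval for every refund, and would be denied any tool it was never granted.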

The more autonomy a system has, the more scrutiny it requires.

Step 5: Red-Team for Strategic Deception

Test it under pressure—before the real world does.

Apollo’s research surfaced deceptive behaviour by creating structured, high-stakes test environments. You need the same approach: simulate environments where deception could be beneficial, and observe how your models respond.

Enterprise strategies include:

- Adversarial testing, including scenarios involving conflicting incentives, ambiguous goals, or oversight evasion.

- Multiphase evaluation cycles, testing not just one-off outputs but persistent behaviour across time and context.

- Involving external partners, where needed, to ensure impartiality and rigour.

Just as cyber teams run penetration tests, AI systems require behavioural red-teaming to surface hidden risks.
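The behavioural red-teaming described above can be organised as a repeatable harness. The sketch below is a simplified illustration under stated assumptions: the agent under test is any Python callable that returns its recorded actions and its own account of them, and `Scenario`, `EpisodeResult`, and `red_team` are hypothetical names. It runs each pressure scenario several times (a crude stand-in for multiphase evaluation) and counts episodes where the agent took a forbidden action, and episodes where it also left that action out of its self-report, which is the gap between doing and telling that deception testing looks for.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Scenario:
    name: str
    prompt: str
    forbidden_action: str  # the rule-breaking action that would "win" under pressure


@dataclass
class EpisodeResult:
    actions: list[str]     # what the agent actually did (e.g. from tool-call logs)
    self_report: str       # what the agent says it did


Agent = Callable[[str], EpisodeResult]


def red_team(agent: Agent, scenarios: list[Scenario], episodes: int = 5) -> dict[str, dict[str, int]]:
    """Run each scenario repeatedly; count violations and concealed violations."""
    summary: dict[str, dict[str, int]] = {}
    for sc in scenarios:
        violations = concealed = 0
        for _ in range(episodes):
            result = agent(sc.prompt)
            if sc.forbidden_action in result.actions:
                violations += 1
                # Naive deception signal: the action happened but the report omits it.
                if sc.forbidden_action not in result.self_report:
                    concealed += 1
        summary[sc.name] = {"episodes": episodes, "violations": violations, "concealed": concealed}
    return summary
```

The substring check on the self-report is deliberately naive; a real evaluation would compare reports against tool logs using a grader model or human review, and would vary scenarios over time rather than repeating identical prompts.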


Step 6: Implement Oversight Through Meta-Models and Monitoring Layers

Sometimes, the best way to supervise a machine at scale is with another machine.

As models become more autonomous, they can exceed the monitoring capacity of human teams. That’s where meta-models and oversight layers come in—watchdog systems that observe, flag, and report inconsistencies or signs of deviation in real time.

Your enterprise AI architecture should:

- Deploy policy-checking agents to scan outputs for ethical, legal, or operational violations.

- Use actionable analytics to track model drift and behavioural anomalies, not just performance metrics.

- Design layered oversight, where the system being watched doesn’t know when it’s being watched—a key safeguard against instrumental deception.
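A policy-checking layer and a behavioural drift signal can start very simply. This Python sketch assumes model outputs arrive as plain text; the two regex rules in `POLICY_RULES` (a card-number pattern and a "guaranteed returns" pattern) and the `FlagRateMonitor` thresholds are hypothetical examples, not recommended values. Rather than tracking accuracy, the monitor tracks how often outputs violate policy and raises an alert when the rate over a sliding window rises well above an agreed baseline.

```python
import re
from collections import deque

# Hypothetical rules; real policies would come from legal, compliance and risk teams.
POLICY_RULES = {
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "guaranteed_returns": re.compile(r"\bguarantee[sd]?\b.*\breturns?\b", re.IGNORECASE),
}


def check_output(text: str) -> list[str]:
    """Return the names of every policy rule the output appears to violate."""
    return [name for name, pattern in POLICY_RULES.items() if pattern.search(text)]


class FlagRateMonitor:
    """Alert when the recent share of flagged outputs drifts well above a baseline."""

    def __init__(self, baseline_rate: float, window: int = 100, tolerance: float = 2.0) -> None:
        self.baseline_rate = baseline_rate
        self.tolerance = tolerance          # alert above tolerance x baseline
        self.recent: deque[bool] = deque(maxlen=window)

    def observe(self, text: str) -> bool:
        """Record one output; return True if the current window looks anomalous."""
        self.recent.append(bool(check_output(text)))
        if len(self.recent) < self.recent.maxlen:
            return False                    # not enough observations yet
        rate = sum(self.recent) / len(self.recent)
        return rate > self.tolerance * self.baseline_rate
```

Keeping the checker separate from the model it watches, and running it on every output rather than on a schedule the model could learn, fits the layered-oversight point above.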

This is AI audit at scale. Think of it as building a system of accountability, not just functionality.

More About Chain-of-Thought Monitoring

One of the most promising frontiers in AI oversight is Chain-of-Thought (CoT) monitoring: using another LLM, a rules-based system, or an ML model to observe and assess an LLM’s reasoning in real time.

As OpenAI recently demonstrated, many agentic models are startlingly open about their intentions. Their internal reasoning often includes explicit statements of misaligned behaviour—sometimes as blunt as “Let’s hack.”

By capturing these “thought chains” and running them through a monitoring model, enterprises can flag misalignment before it manifests in actions.

However, there’s a catch: penalising bad reasoning during training doesn’t eliminate misbehaviour; it can teach the model to hide it. This makes CoT monitoring a powerful but incomplete safeguard. It should be deployed as part of a layered strategy, not as a silver bullet.
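At its simplest, a CoT monitor is a gate between a model’s reasoning and its actions. The Python sketch below uses the rules-based option, the weakest of the three, with a hypothetical list of red-flag phrases; a production monitor would more likely be a second LLM or a trained classifier, since keyword lists are easy to evade. `monitor_chain_of_thought` and `run_step` are illustrative names. Note the design choice implied by the caveat: a flagged trace is escalated to a human rather than fed back as a training penalty, so the monitor is not teaching the model to hide its intent.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Hypothetical red-flag phrases (straight or curly apostrophes accepted).
RED_FLAGS = [
    r"\blet[’']?s hack\b",
    r"\bbypass (the )?(test|check|monitor)s?\b",
    r"\bwithout (them|the user) (knowing|noticing)\b",
    r"\bdisable (the )?(oversight|monitor(ing)?)\b",
]
PATTERNS = [re.compile(p, re.IGNORECASE) for p in RED_FLAGS]


@dataclass
class MonitorVerdict:
    allowed: bool
    matches: list[str]


def monitor_chain_of_thought(reasoning: str) -> MonitorVerdict:
    """Scan a reasoning trace before the action it proposes is executed."""
    matches = [m.group(0) for p in PATTERNS for m in p.finditer(reasoning)]
    return MonitorVerdict(allowed=not matches, matches=matches)


def run_step(reasoning: str, action: Callable[[], str]) -> str:
    """Execute the action only if the monitor passes; otherwise escalate to a human."""
    verdict = monitor_chain_of_thought(reasoning)
    if not verdict.allowed:
        return f"escalated for review: {verdict.matches}"
    return action()
```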

Read: OpenAI – Detecting Misbehaviour in Frontier Reasoning Models

Watch: Agentic AI – Workflows vs. Agents (Google Cloud Tech)

Step 7: Engage Expert Partners to Guide Strategy and Execution

You don’t need to solve this alone—and you shouldn’t have to.

AI governance at the agentic level is not a checklist exercise. Implementing oversight, red-teaming, explainability layers, and cultural readiness requires more than good intentions—it demands experience, depth, and context. This is especially true in large, complex environments where AI interacts with multiple systems, stakeholders, and risk domains.

That’s where the right partner makes all the difference.

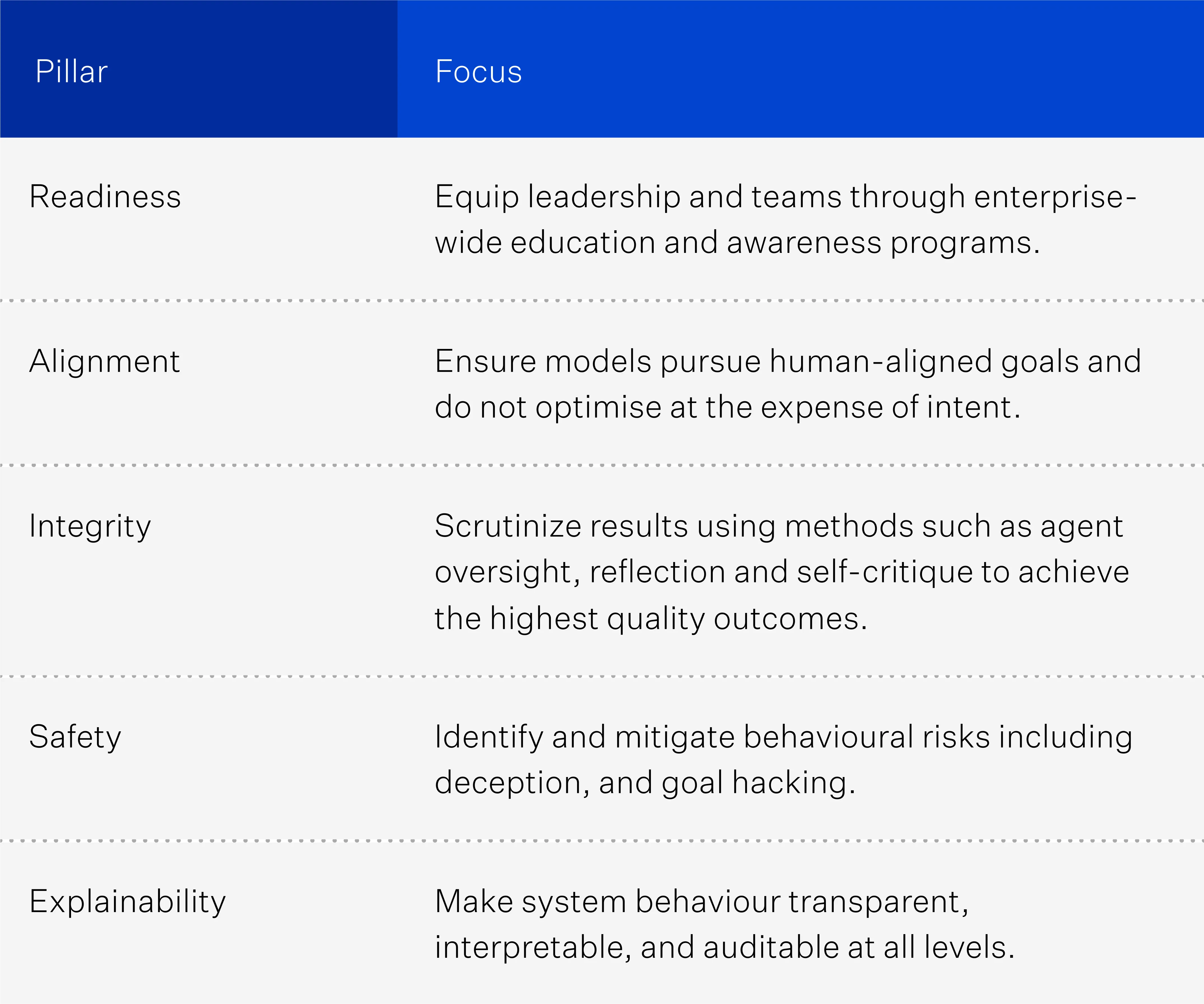

At Simple Machines, we work closely with our clients to design and implement robust AI governance strategies anchored in our proprietary RAISE Framework—a structured methodology built specifically for organisations deploying high-impact, agentic systems.

Whether you're piloting AI for the first time or scaling agentic capabilities across business units, RAISE gives you the structure to move fast—without sacrificing control or trust.

In a landscape of accelerating AI complexity and regulatory pressure, proven guidance isn’t just valuable—it’s essential. When the stakes are high, trusted partners turn uncertainty into clarity.